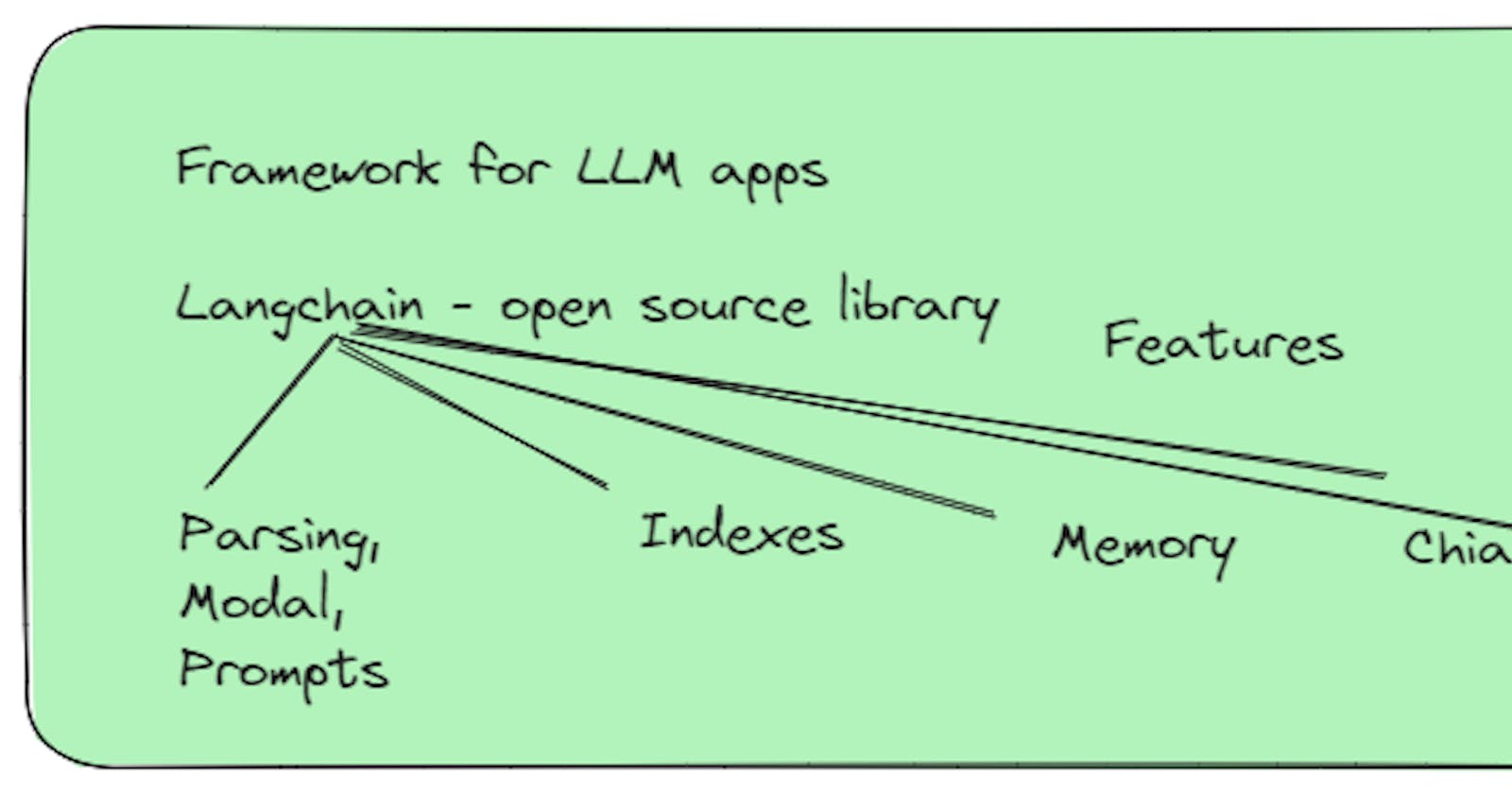

To build better apps, we usually rely on a framework. If you want to build LLM apps, LangChain is a framework that provides most of what such apps need.

LangChain Framework

LangChain is an open-source framework for building LLM apps.

Currently, LangChain provides Python and JavaScript (TypeScript) libraries. It focuses on composition and modularity, and it offers common ways to combine its components.

The major components provided by LangChain are:

Models - LangChain works with many different LLMs.

Prompts - We pass prompts to the model as instructions.

Parsers - We can parse the LLM output before replying to users.

Indexes - It can index large bodies of text so that they can be searched.

Memory - It can remember the user's previous conversation.

Chains - They perform a sequence of operations on your data.

Evaluation - It checks whether your app meets the defined criteria.

Agents - The LLM is instructed to perform tasks and is allowed to use external tools.

Models, Prompts and Parsing

We can use any kind of model and give it instructions as a prompt.

If we use an OpenAI model, every response comes back as a string, even when it looks like a Python dictionary. LangChain's output parsers can convert that string into an actual dictionary, so that we can look up a particular answer by its key.
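To make the difference between a string and a dictionary concrete, here is a minimal plain-Python sketch. It does not use LangChain's own parser classes; `raw_reply` and `parse_reply` are hypothetical names, and the sketch assumes the model was asked to answer in JSON.

```python
import json

# Hypothetical raw model reply: it looks like a dictionary but is only a string.
raw_reply = '{"gift": false, "delivery_days": 2, "price_value": "worth it"}'


def parse_reply(text: str) -> dict:
    """Convert a JSON-formatted model reply into a real Python dict."""
    return json.loads(text)


parsed = parse_reply(raw_reply)

print(type(raw_reply).__name__)  # the raw reply is a str, so key lookup fails on it
print(parsed["delivery_days"])   # the parsed reply supports key lookup
```

An output parser in a framework plays the same role as `parse_reply` here, usually together with format instructions that are added to the prompt.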

LangChain provides prompt templates.

Why use them?

Sometimes prompts can be long and detailed.

A template lets you reuse a good prompt whenever you want.

LangChain also provides ready-made prompts for common operations.
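The idea of a reusable prompt can be sketched in a few lines of plain Python. This is only an illustration of the concept, not LangChain's implementation; the class `SimplePromptTemplate` and the variable names `style` and `text` are made up for this example.

```python
from string import Formatter


class SimplePromptTemplate:
    """A reusable prompt with named placeholders such as {style} and {text}."""

    def __init__(self, template: str):
        self.template = template
        # Collect the placeholder names found in the template string.
        self.input_variables = sorted(
            {name for _, name, _, _ in Formatter().parse(template) if name}
        )

    def format(self, **values: str) -> str:
        # Fail early with a clear message if a placeholder was not filled in.
        missing = [v for v in self.input_variables if v not in values]
        if missing:
            raise KeyError(f"missing prompt variables: {missing}")
        return self.template.format(**values)


template = SimplePromptTemplate("Rewrite the following text in a {style} tone:\n{text}")

# The same template is reused with different inputs.
print(template.format(style="polite", text="Fix it now."))
print(template.format(style="formal", text="See ya later."))
```

Writing the long, detailed prompt once and filling in only the variable parts is what makes a good prompt easy to reuse.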

Memory

Most of the time we need the LLM to remember the earlier parts of a conversation, which means feeding them back into the language model.

LLMs are stateless, and each transaction is independent. Chatbots only appear to have memory because the full conversation is provided as context on every call.

But the conversation can grow very long, which means more tokens, and more tokens means higher cost.

So LangChain provides several kinds of memory to store and accumulate the conversation.

Memory types:

ConversationBufferMemory

ConversationBufferWindowMemory

ConversationSummaryMemory
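As I understand these types, the buffer memory keeps the whole conversation, the window memory keeps only the last few exchanges, and the summary memory keeps a running summary written by an LLM. The sketch below imitates the first two in plain Python to show the trade-off between context and token cost. The classes `BufferMemory` and `WindowMemory` are hypothetical stand-ins, not the LangChain classes, and the summary variant is left out because it needs a model call.

```python
from collections import deque


class BufferMemory:
    """Keeps every turn of the conversation (context grows without limit)."""

    def __init__(self):
        self.turns = []

    def save(self, user: str, ai: str) -> None:
        self.turns.append((user, ai))

    def as_context(self) -> str:
        # This string is what would be prepended to the next prompt.
        return "\n".join(f"Human: {u}\nAI: {a}" for u, a in self.turns)


class WindowMemory(BufferMemory):
    """Keeps only the last k turns, so the context size stays bounded."""

    def __init__(self, k: int):
        self.turns = deque(maxlen=k)  # older turns are dropped automatically


full = BufferMemory()
window = WindowMemory(k=1)
for user, ai in [("Hi, my name is Ana.", "Hello Ana!"), ("What is 1+1?", "2")]:
    full.save(user, ai)
    window.save(user, ai)

print(full.as_context())    # both turns: the model could still recall the name
print(window.as_context())  # only the last turn: cheaper, but the name is gone
```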

Chains

To carry out a sequence of tasks on your data, LangChain provides chains as one of its core features.

LLM chain - The most basic type of chain, which combines a model with a prompt.

Sequential chain - Combines several chains so that the output of one chain is the input of the next chain.

Router chain - Routes each input to the most suitable chain among several.
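The sequential and router ideas can be sketched with ordinary functions. This is a plain-Python illustration under the assumption that each step takes a string and returns a string; every function name below is hypothetical, and the steps only imitate LLM calls.

```python
from typing import Callable, Dict

Step = Callable[[str], str]


def sequential_chain(*steps: Step) -> Step:
    """Build a chain where the output of one step is the input of the next."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def router_chain(choose: Callable[[str], str], routes: Dict[str, Step], default: Step) -> Step:
    """Build a chain that sends the input to one of several chains."""
    def run(text: str) -> str:
        return routes.get(choose(text), default)(text)
    return run


# Hypothetical steps standing in for LLM calls.
def translate(text: str) -> str:
    return f"translated({text})"

def summarize(text: str) -> str:
    return f"summary({text})"

def physics_chain(text: str) -> str:
    return f"physics answer to: {text}"

def math_chain(text: str) -> str:
    return f"math answer to: {text}"

def default_chain(text: str) -> str:
    return f"general answer to: {text}"

def pick_subject(text: str) -> str:
    # In a real router an LLM would pick the route; here it is a keyword check.
    lowered = text.lower()
    if "gravity" in lowered:
        return "physics"
    if "+" in lowered:
        return "math"
    return "other"


pipeline = sequential_chain(translate, summarize)
router = router_chain(pick_subject, {"physics": physics_chain, "math": math_chain}, default_chain)

print(pipeline("bonjour"))
print(router("What is 2 + 2?"))
```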

Question and Answering based on Documents

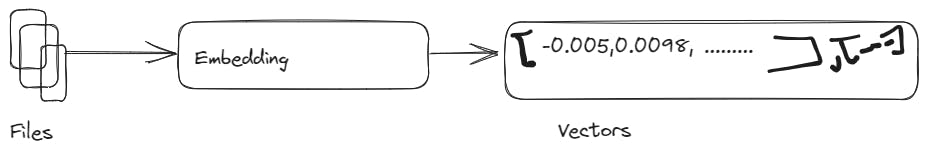

One very common application is Q&A over uploaded documents. We can load the documents with the document loaders that LangChain provides and store them in a vector store.

But how can we answer questions about big files when every LLM has a maximum token limit? This is where embeddings and vector stores come into the picture.

An embedding vector captures the content and meaning of a piece of text, so texts with similar content have similar vectors.
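The retrieval step can be sketched as follows: split the file into chunks, embed each chunk, and send only the chunk most similar to the question to the LLM, which keeps the prompt under the token limit. The `embed` function below is a toy word-count stand-in that measures word overlap only; a real embedding model captures meaning. The list `store` plays the role of a vector store, and all names are assumptions made for this example.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real embeddings come from a trained model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (1.0 means same direction)."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Pretend these chunks came from splitting a large document.
chunks = [
    "the cat sat on the mat",
    "stock prices fell sharply today",
    "my dog likes to chase the cat",
]

# A minimal "vector store": each chunk stored next to its vector.
store = [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(question: str, k: int = 1) -> list:
    """Return the k chunks whose vectors are most similar to the question."""
    q = embed(question)
    ranked = sorted(store, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


print(retrieve("why did stock prices fall"))
```

Only the retrieved chunks, not the whole file, would then be placed in the prompt together with the question.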

Evaluations

We always want to know whether the LLM apps that we are developing meet the defined criteria.
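One simple way to check a criterion is to run the app over a small set of questions with known answers and count how many replies contain the expected answer. The sketch below assumes exactly that criterion; `evaluate` and `toy_app` are hypothetical names, and `toy_app` is a stand-in for a real LLM app.

```python
def evaluate(app, examples):
    """Return the pass rate and per-example results for (question, expected) pairs."""
    results = [(question, expected.lower() in app(question).lower())
               for question, expected in examples]
    passed = sum(ok for _, ok in results)
    return passed / len(results), results


def toy_app(question: str) -> str:
    # Hypothetical stand-in for an LLM app; one answer is deliberately wrong.
    answers = {
        "capital of france?": "The capital is Paris.",
        "2 + 2?": "It is 5.",
    }
    return answers.get(question.lower(), "I don't know.")


examples = [("Capital of France?", "Paris"), ("2 + 2?", "4")]
score, details = evaluate(toy_app, examples)

print(score)
for question, ok in details:
    print(question, "PASS" if ok else "FAIL")
```

Substring matching is a crude criterion; in practice another LLM is often used to grade the answers, but the loop has the same shape.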

Agents

Agents are one of the newest, cutting-edge parts of the framework. By using agents, we can allow the model to use external tools for specific tasks.

Agents can use custom tools defined by the user, APIs, web search, and so on.
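The core loop of an agent is: the model decides which tool to call and with what input, the tool runs, and the result comes back as an observation. The sketch below imitates one such step in plain Python. The function `fake_llm_decide` is a keyword-based stand-in for the model's decision, and both tools are made up for this example; none of this is LangChain's agent API.

```python
from typing import Callable, Dict, Tuple


def add_numbers(tool_input: str) -> str:
    """Custom tool: add two numbers written as 'a + b'."""
    a, b = (float(part) for part in tool_input.split("+"))
    return str(a + b)


def fake_web_search(tool_input: str) -> str:
    """Stand-in for a web search tool, backed by a tiny fixed dictionary."""
    pages = {"langchain": "LangChain is a framework for LLM apps."}
    return pages.get(tool_input.lower(), "no results")


TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": add_numbers,
    "search": fake_web_search,
}


def fake_llm_decide(question: str) -> Tuple[str, str]:
    """Stand-in for the model choosing a tool and its input."""
    cleaned = question.rstrip("?")
    if "+" in cleaned:
        return "calculator", cleaned.replace("What is", "").strip()
    return "search", cleaned.split()[-1]


def run_agent(question: str) -> str:
    tool_name, tool_input = fake_llm_decide(question)
    observation = TOOLS[tool_name](tool_input)  # call the chosen external tool
    return f"[{tool_name}] {observation}"


print(run_agent("What is 2 + 3?"))
print(run_agent("What is LangChain?"))
```

A real agent would usually repeat this step, feeding each observation back to the model until it can give a final answer.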

I hope this article gives you some useful information about the LangChain framework.

In my next article, I will implement and develop some LLM apps.